Scrape data from the Moroccan House of Representatives.

Welcome to barlaman

A Python library to scrape raw data from the Moroccan Parliament (House of Representatives) website.

Requirements

The following packages should be installed:

- BeautifulSoup & requests for web scraping

- pdfplumber for PDF scraping

- arabic_reshaper & python-bidi to work with Arabic text

The library also uses these standard-library modules, which ship with Python and need no installation:

- re for regular expressions

- json & os to work with JSON files and folders
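A quick way to check the requirements above is to ask Python whether each package can be imported. The snippet below is a minimal sketch and not part of barlaman: the helper name missing_modules is hypothetical, and the list uses import names, which differ from the package names for BeautifulSoup (bs4) and python-bidi (bidi).

```python
from importlib.util import find_spec

# Import names, not PyPI names: BeautifulSoup is imported as bs4,
# python-bidi as bidi.
REQUIRED_MODULES = ["bs4", "requests", "pdfplumber", "arabic_reshaper", "bidi"]


def missing_modules(names):
    """Return the import names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are importable.")
```

Any name reported as missing can then be installed with pip before running the scraper.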

Install (NOT YET)

pip install

Running the scraper

I. Scrape the schedule :

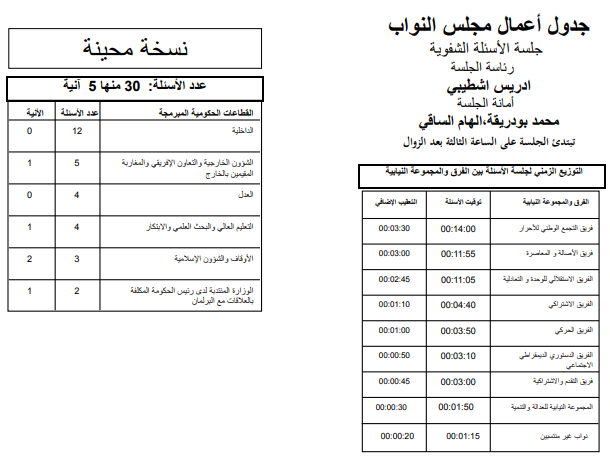

Each Monday, in accordance with the Constitution, the House of Representatives convenes in a plenary sitting devoted to oral questions. Before the sitting, the House of Representatives publishes the schedule of the sitting (a PDF file in Arabic). The first page of this document contains the following data (see the illustration):

- Time allocated to parliamentary groups

- Ministries invited to the sitting

Illustration

To get the schedule, use the function getSchedule(path), where path is the document path:

path= ".../ordre_du_jour_30052022-maj_0.pdf"

schedual = getSchedule(path)

The output schedual is a tuple of 3 dictionaries with the following keys:

- Dict 1:

  - "President": The president of the session

  - "nbrQuestTotalSession": Total number of questions

- Dict 2:

  - "nomsMinister": Names of the ministers present in the session

  - "numsQuest": Total number of questions per minister

  - "numQuestU": Number of urgent (أنى) questions

- Dict 3:

  - "nomsGroup": Parliamentary groups

  - "quesTime": Time allocated to each group

  - "addTime": Time for additional comments

We can store these dictionaries in DataFrames:

# Turn Dict 2 into a DataFrame

import pandas as pd

pd.DataFrame(schedual[1])
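If pandas is not available, the same tuple can be unpacked and turned into row records with plain Python. This is a minimal sketch: it assumes Dict 2 and Dict 3 hold parallel lists of equal length (which the DataFrame conversion above suggests), the helper columns_to_rows is hypothetical, and the values in the stand-in tuple are placeholders rather than real schedule data.

```python
def columns_to_rows(columns):
    """Turn a dict of parallel lists into a list of per-row dicts."""
    keys = list(columns)
    return [dict(zip(keys, values)) for values in zip(*(columns[k] for k in keys))]


# Stand-in for the output of getSchedule(path). The keys are the documented
# ones; the values are invented placeholders, NOT real parliamentary data.
schedual = (
    {"President": "<president name>", "nbrQuestTotalSession": "3"},
    {
        "nomsMinister": ["<ministry A>", "<ministry B>"],
        "numsQuest": ["2", "1"],
        "numQuestU": ["0", "1"],
    },
    {"nomsGroup": ["<group A>"], "quesTime": ["00:10:00"], "addTime": ["00:02:00"]},
)

# Unpack the three dictionaries by position.
session_info, ministers, groups = schedual

minister_rows = columns_to_rows(ministers)
group_rows = columns_to_rows(groups)

for row in minister_rows:
    print(row)
```

Each element of minister_rows then describes one ministry, which is convenient for writing JSON or CSV files.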

II. Scrape sitting's questions :

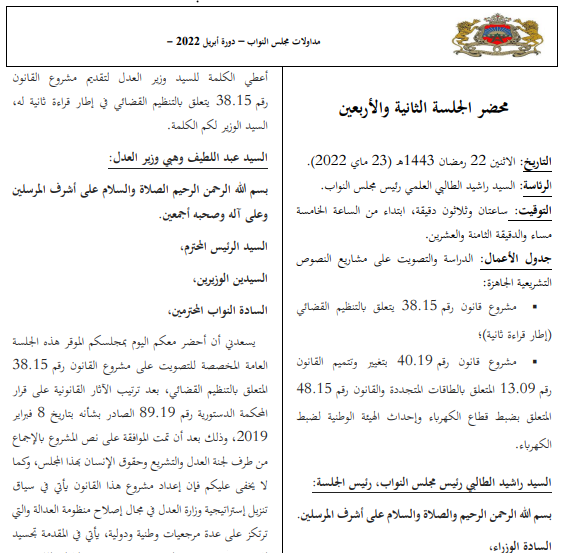

The remaining pages of the schedule document contain:

- Number of questions for each Ministry

- Questions asked during the sitting, who asked these questions and time allocated to each question

Illustration

To get these question tables, use the function getQuesTable(path), where path is the document path:

path= ".../ordre_du_jour_30052022-maj_0.pdf"

question = getQuesTable(path)

The output question is a dictionary of dictionaries whose keys are the names of the ministries present at the sitting. Each inner dictionary has the following keys:

- "timeQuest": Time allocated for each question

- "parlGroup": Parliamentary group

- "txtQuest": The question

- "typeQuest": Question type

- "codeQuest": Question code

- "indexQuest": Question index (order)

- "subjIden": Questions with the same subjIden have an identical subject (وحدة الموضوع)

Example

{

'ﺍﻟﻌﺪﻝ': {'timeQuest': ['00:02:00', '00:02:00', '00:01:40', '00:01:35'],

'parlGroup': ['ﻓﺮﻳﻖ الأﺼﺎﻟﺔ ﻭ ﺍﻟﻤﻌﺎﺻﺮﺓ',

'ﻓﺮﻳﻖ الأﺼﺎﻟﺔ ﻭ ﺍﻟﻤﻌﺎﺻﺮﺓ',

'ﺍﻟﻔﺮﻳﻖ الاﺸﺘﺮﺍﻛﻲ',

'ﺍﻟﻔﺮﻳﻖ الاﺴﺘﻘﺎﻟﻠﻲ ﻟﻠﻮﺣﺪﺓ ﻭ ﺍﻟﺘﻌﺎﺩﻟﻴﺔ'],

'txtQuest': ['ﺗﻮﻓﻴﺮ ﺍﻟﺘﺠﻬﻴﺰﺍﺕ ﺍﻟﻀﺮﻭﺭﻳﺔ ﺇﻟﻨﺠﺎﺡ ﻋﻤﻠﻴﺔ ﺍﻟﻤﺤﺎﻛﻤﺔ ﻋﻦ ﺑﻌﺪ',

'ﺗﻨﻔﻴﺬ الأﺤﻜﺎﻡ ﺍﻟﻘﻀﺎﺋﻴﺔ ﺿﺪ ﺷﺮﻛﺎﺕ ﺍﻟﺘﺄﻣﻴﻦ',

'ﺧﻄﻮﺭﺓ ﻧﺸﺮ ﻭﺗﻮﺯﻳﻊ ﺻﻮﺭ الأﻄﻔﺎﻝ ﺃﻭ ﺍﻟﻘﺎﺻﺮﻳﻦ',

'ﺇﺷﻜﺎﻟﻴﺔ ﺍﻟﺒﻂﺀ ﻓﻲ ﺇﺻﺪﺍﺭ الأﺤﻜﺎﻡ ﺍﻟﻘﻀﺎﺋﻴﺔ'],

'typeQuest': ['ﻋﺎﺩﻱ', 'ﻋﺎﺩﻱ', 'ﻋﺎﺩﻱ', 'ﻋﺎﺩﻱ'],

'codeQuest': ['1505 ', '2386 ', '2413 ', '2829 '],

'indexQuest': ['22', '23', '24', '25'],

'subjIden': [' ', ' ', ' ', ' ']}

}
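Because every key holds a parallel list, it is often handy to flatten this structure into one record per question. The sketch below is not part of barlaman: the helpers to_seconds and flatten_questions are hypothetical, and it assumes all lists under a ministry have the same length and that timeQuest always uses the HH:MM:SS form shown in the example. It reuses a subset of the example values.

```python
def to_seconds(hhmmss):
    """Convert an 'HH:MM:SS' string to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def flatten_questions(question):
    """Return one dict per question, tagged with its ministry."""
    rows = []
    for ministry, columns in question.items():
        keys = list(columns)
        for values in zip(*(columns[k] for k in keys)):
            row = {"ministry": ministry}
            # Strip stray whitespace such as the trailing space in '1505 '.
            row.update({k: v.strip() for k, v in zip(keys, values)})
            row["seconds"] = to_seconds(row["timeQuest"])
            rows.append(row)
    return rows


# Subset of the example above (text columns omitted for brevity).
question = {
    "ﺍﻟﻌﺪﻝ": {
        "timeQuest": ["00:02:00", "00:02:00", "00:01:40", "00:01:35"],
        "codeQuest": ["1505 ", "2386 ", "2413 ", "2829 "],
        "indexQuest": ["22", "23", "24", "25"],
    }
}

rows = flatten_questions(question)
total_seconds = sum(row["seconds"] for row in rows)
print(len(rows), "questions,", total_seconds, "seconds allocated")
```

The resulting list of records can be passed directly to pd.DataFrame or written out as JSON.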

III. Sitting's transcript

After a sitting, the Parliament publishes a PDF file of the sitting transcript in Arabic containing all debates that took place during the sitting. The unique content, structure and language of records of parliamentary debates make them an important object of study in a wide range of disciplines in the social sciences (political science) [Erjavec, T., Ogrodniczuk, M., Osenova, P. et al. The ParlaMint corpora of parliamentary proceedings] and computer science (natural language processing, NLP).

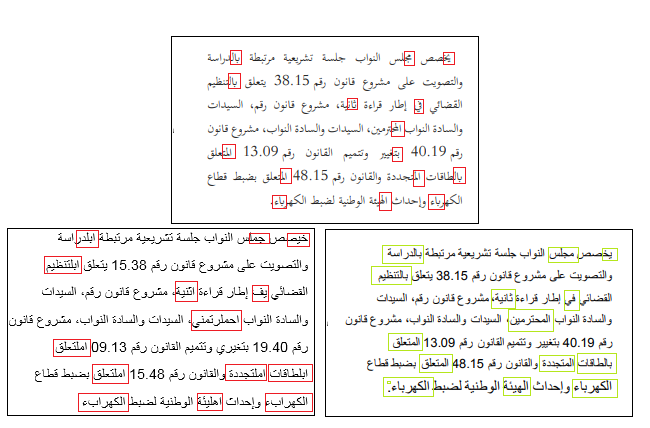

Illustration : Transcript

Unfortunately, we can't use the transcript document as provided by the parliament website, because it is written with a special font. If we try to scrape the original document, we get text full of mistakes (characters not in the right order). So we must change the document's font before using it. See the illustration:

- The text at the top is the original document.

- The text at the bottom left is what we get when we scrape the original document.

- The text at the bottom right is what we get when we scrape the document after changing its font.

So far we know of no Pythonic way to change the font of the document, especially since it is in Arabic (all suggestions are most welcome). So we do it the old way: using Microsoft Word.

To get the raw transcripts, use the function getRawTrscp(path,start=1,end=-1), where path is the document path, start is the page number where text extraction begins (the first page is 1, not 0!) and end is the page number where text extraction stops.

path=".../42-cdr23052022WF.pdf"

transcript=getRawTrscp(path)

The output is a dictionary of dictionaries of the form: {...,'page_x':{"page":page,"rigth":right,"left":left},...}

where :

- page_x : the key for page number x (e.g. page_1 is page number 1)

- page : the text of page_x

- right : the text of the right side of page_x

- left : the text of the left side of page_x
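When stitching pages back together, note that a plain string sort would place page_10 before page_2. The sketch below orders the keys by their numeric suffix before joining the page text. It is an illustration only: the helpers page_number and full_text are hypothetical, the stand-in dictionary holds placeholder text, and the keys (including the "rigth" spelling) are copied from the form shown above.

```python
def page_number(key):
    """Extract the integer x from a 'page_x' key."""
    return int(key.rsplit("_", 1)[1])


def full_text(transcript):
    """Concatenate the "page" text of every page in numeric page order."""
    ordered_keys = sorted(transcript, key=page_number)
    return "\n".join(transcript[key]["page"] for key in ordered_keys)


# Stand-in for the output of getRawTrscp(path); the text is placeholder data.
transcript = {
    "page_10": {"page": "<text of page 10>", "rigth": "<right 10>", "left": "<left 10>"},
    "page_2": {"page": "<text of page 2>", "rigth": "<right 2>", "left": "<left 2>"},
    "page_1": {"page": "<text of page 1>", "rigth": "<right 1>", "left": "<left 1>"},
}

print(full_text(transcript))
```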

IV. Deputy data and parliamentary activities

Each deputy has a section on the parliament website where we can find the following data:

- General info about the deputy: Name, Party, Group, District ...

- Parliamentary tasks

- Deputy agenda

- Deputy questions: the total number of questions and, for each question, the following details:

  - Question date

  - Question id

  - Question title

  - Question status: whether the question received a response or not

  - Answer date

  - Question text

To get this information, use the function getDeputy(url,include_quest=True,quest_link=False,quest_det=False) where:

- url: link to the deputy's page (to get the URLs of deputies use getUrls())

- include_quest (default=True): True to return data about the deputy's questions

- quest_link (default=False): True to include the link of each question

- quest_det (default=False): True to include question details (question text, Date Answer ...)

# to get a list of url of deputies use getUrls()

url="https://www.chambredesrepresentants.ma/fr/m/adfouf"

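# We can have three scenarios

# 1) Defaults: question data without links or details

first=getDeputy(url)

# 2) Same as the first call, since include_quest=True is already the default

second=getDeputy(url,include_quest=True)

# 3) Also include the link and the details of each question

third=getDeputy(url,quest_link=True,quest_det=True)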

The output is a dictionary with the structure below :

{"Nom":name,"description":desc, "task":task,"Agenda":agenda,"Questions":Quests}

In the first scenario we get:

{'Nom': {'Député': ' Abdelmajid El Fassi Fihri'},

'description': {'Parti': "Parti de l'istiqlal",

'Groupe': "Groupe Istiqlalien de l'unité et de l'égalitarisme",

'Circonscription': 'Circonscription locale',

'Circonscription_1': 'Fès-Chamalia',

'Legislature': '2021-2026',

'Membre des sections parlementaires': ' Commission Parlementaire Mixte Maroc-UE '},

'task': {'Commission': "Commission de l'enseignement, de la culture et de la communication"},

'Agenda': {'Agenda_1': {'heure': '15:00',

'evenemnt': 'Séance plénière mensuelle des questions de politique générale lundi 13 Juin 2022'},

'Agenda_2': {'heure': '10:00',

'evenemnt': 'Séance plénière mardi 7 Juin 2022 consacrée à la discussion du rapport de la Cour des Comptes 2019-2020'},

'Agenda_3': {'heure': '15:00',

'evenemnt': 'Séance plénière hebdomadaire des questions orales lundi 6 Juin 2022'},...}},

'Questions': {'NbrQuest': ['12'],

'Dates': ['Date : 20/04/2022',

'Date : 04/03/2022',..],

'Questions': ['Question : ترميم المعلمة التاريخية دار القايد العربي بمدينة المنزل، إقليم صفرو',

'Question : المصير الدراسي لطلبة أوكرانيا'..],

'Status': ['R', 'NR', ..],

'Quest_link': [],

'Quest_txt': []}}

In the third scenario, 'Quest_txt' is no longer empty and we get:

'Quest_txt': [{'Nombre Question': ' 3475',

'Objet ': ' ترميم المعلمة التاريخية دار القايد العربي بمدينة المنزل، إقليم صفرو',

'Date réponse ': ' Mercredi 8 Juin 2022',

'Date de la question': 'Mercredi 20 Avril 2022',

'Question': 'صدر مرسوم رقم 2.21.416 ( 16 يونيو 2021) بالجريدة الرسمية عدد 7003 بإدراج المعلمة التاريخية "دار القايد العربي" بمدينة المنزل بإقليم صفرو في عداد الآثار، حيث أصبحت خاضعة للقانون رقم 22.80 المتعلق بالمحافظة على المباني التاريخية والمناظر والكتابات المنقوشة والتحف الفنية والعاديات، وأكد صاحب الجلالة الملك محمد السادس في الرسالة السامية التي وجهها إلى المشاركين في الدورة 23 للجنة التراث العالمي في 27 مارس، 2013 ، "أن المحافظة على التراث المحلي والوطني وصيانته إنما هما محافظة على إرث إنساني يلتقي عنده باعتراف متبادل جميع أبناء البشرية".وقد طالبت جمعية التضامن للتنمية والشراكة بمدينة المنزل بإقليم صفرو، دون الحصول على رد، بتخصيص ميزانية لترميم هذه الدار التاريخية وتحويلها إلى مؤسسة ثقافية لخدمة ساكنة المنطقة وشبابها بخلق دينامية ثقافية لتكون منارة للأجيال القادمة.وعليه، نسائلكم السيد الوزير المحترم، ماهي الاجراءات التي ستتخذها وزارتكم قصد ترميم وتأهيل معلمة دار القايد العربي بمدينة المنزل بإقليم صفرو وتحويلها إلى مؤسسة ثقافية في إطار عدالة مجالية.'},...]
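The "Questions" entry lends itself to a quick summary, for example counting statuses and finding the date range of a deputy's questions. The sketch below is not part of barlaman: the helpers parse_question_date and summarize_questions are hypothetical, it assumes every date string follows the 'Date : DD/MM/YYYY' form shown in the first scenario, and it assumes 'R' and 'NR' mark questions with and without a response. The sample values are copied from the truncated output above.

```python
from collections import Counter
from datetime import datetime


def parse_question_date(raw):
    """Parse a string such as 'Date : 20/04/2022' into a date object."""
    value = raw.split(":", 1)[1].strip()
    return datetime.strptime(value, "%d/%m/%Y").date()


def summarize_questions(questions):
    """Count statuses and find the first and last question dates."""
    dates = [parse_question_date(raw) for raw in questions["Dates"]]
    return {
        "status_counts": dict(Counter(questions["Status"])),
        "first_date": min(dates) if dates else None,
        "last_date": max(dates) if dates else None,
    }


# Values copied from the (truncated) first-scenario output.
questions = {
    "NbrQuest": ["12"],
    "Dates": ["Date : 20/04/2022", "Date : 04/03/2022"],
    "Status": ["R", "NR"],
}

summary = summarize_questions(questions)
print(summary)
```

With a real result, the same function would be called as summarize_questions(first["Questions"]).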
