OpusCleaner

OpusCleaner is a machine translation/language model data cleaner and training scheduler. The training scheduler has moved to OpusTrainer.

Cleaner

The cleaner takes care of downloading and cleaning multiple datasets and preparing them for translation.

opuscleaner-clean --parallel 4 data/train-parts/dataset.filter.json | gzip -c > clean.gz
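To inspect the result of a cleaning run, the compressed output can be read back line by line. The sketch below is only an illustration: it assumes that clean.gz holds one sentence pair per line with the columns separated by tabs, which this README does not state explicitly, and read_pairs is a hypothetical helper name, not part of OpusCleaner.

```python
import gzip


def read_pairs(path):
    """Yield one list of columns per line of a gzipped text file.

    Assumption: columns are tab-separated, one sentence pair per line.
    """
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        for line in fh:
            # Strip only the trailing newline so empty columns are preserved
            yield line.rstrip("\n").split("\t")


if __name__ == "__main__":
    import sys

    # Print the first five rows of the file given on the command line
    for index, columns in enumerate(read_pairs(sys.argv[1])):
        if index >= 5:
            break
        print(columns)
```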

Installation for cleaning

If you just want to use OpusCleaner for cleaning, you can install it from PyPI and then run it:

pip3 install opuscleaner

opuscleaner-server

Then go to http://127.0.0.1:8000/ to open the interface.

You can also install and run OpusCleaner on a remote machine, and use SSH local forwarding (e.g. ssh -L 8000:localhost:8000 you@remote.machine) to access the interface on your local machine.

Dependencies

(Mainly listed as shortcuts to documentation)

- FastAPI as the base for the backend part.

- Pydantic for conversion of untyped JSON to typed objects. FastAPI supports it automatically and gives you useful error messages when the input is malformed.

- Vue for the frontend.

Screenshots

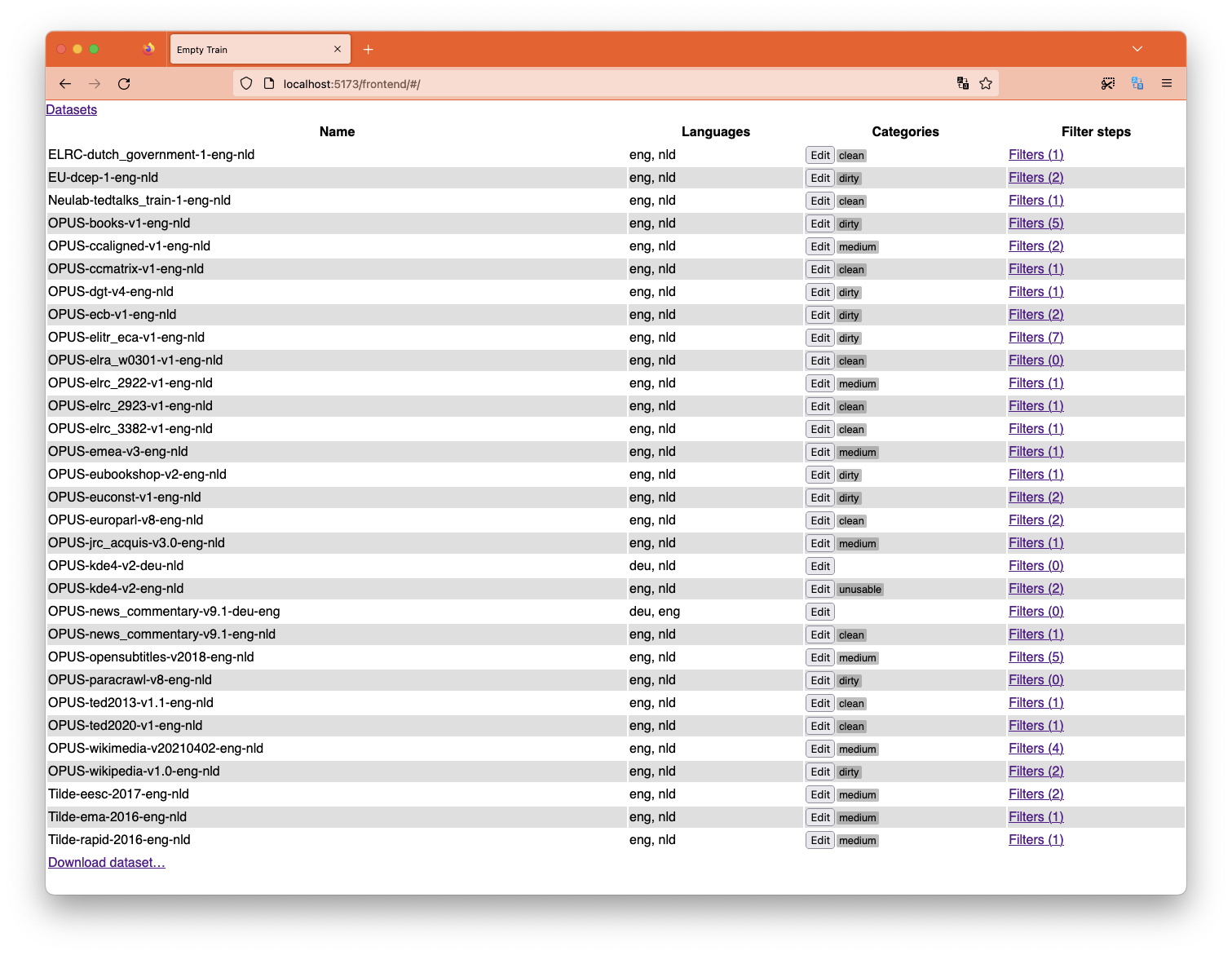

List and categorize the datasets you are going to use for training.

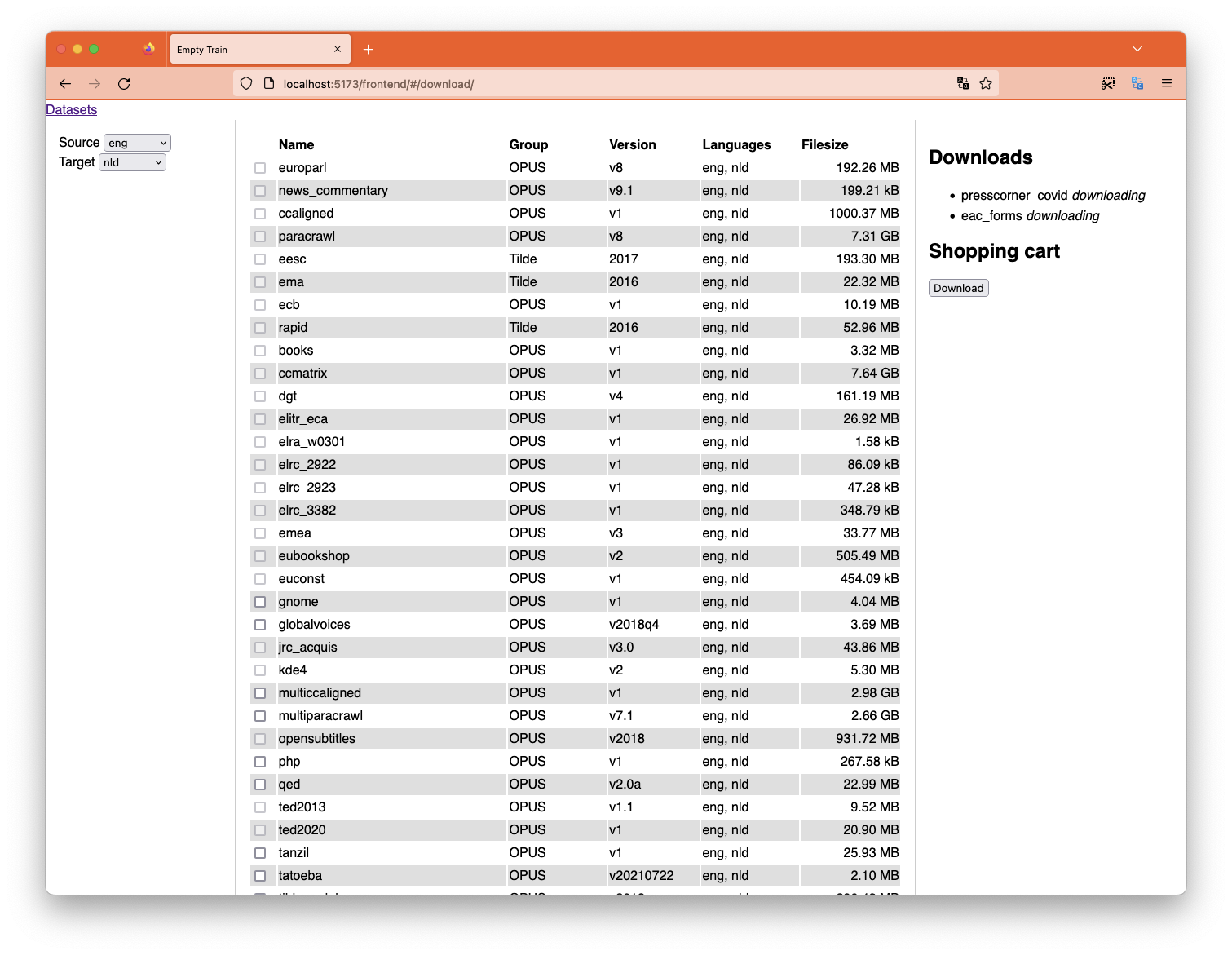

Download more datasets right from the interface.

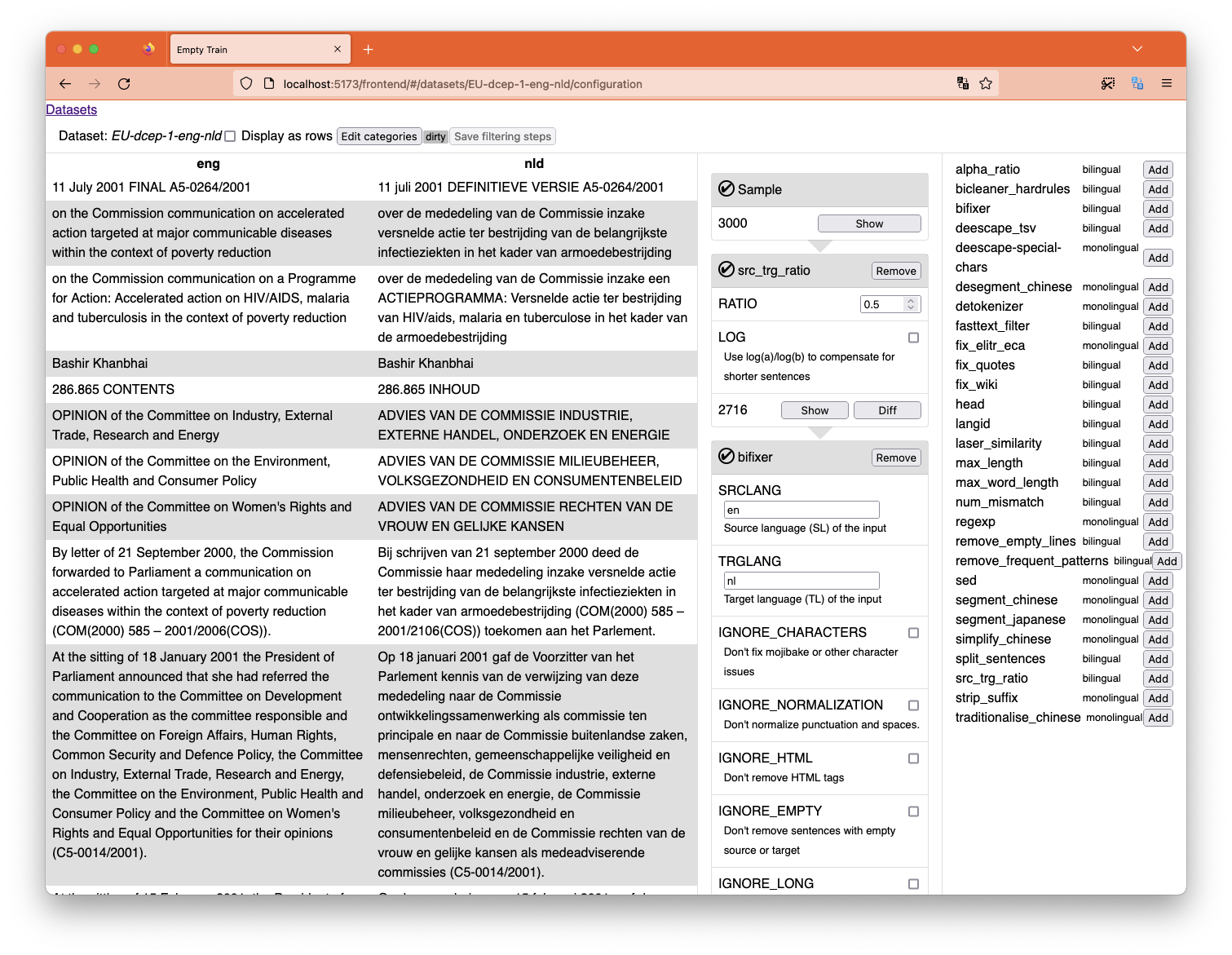

Filter each individual dataset, showing you the results immediately.

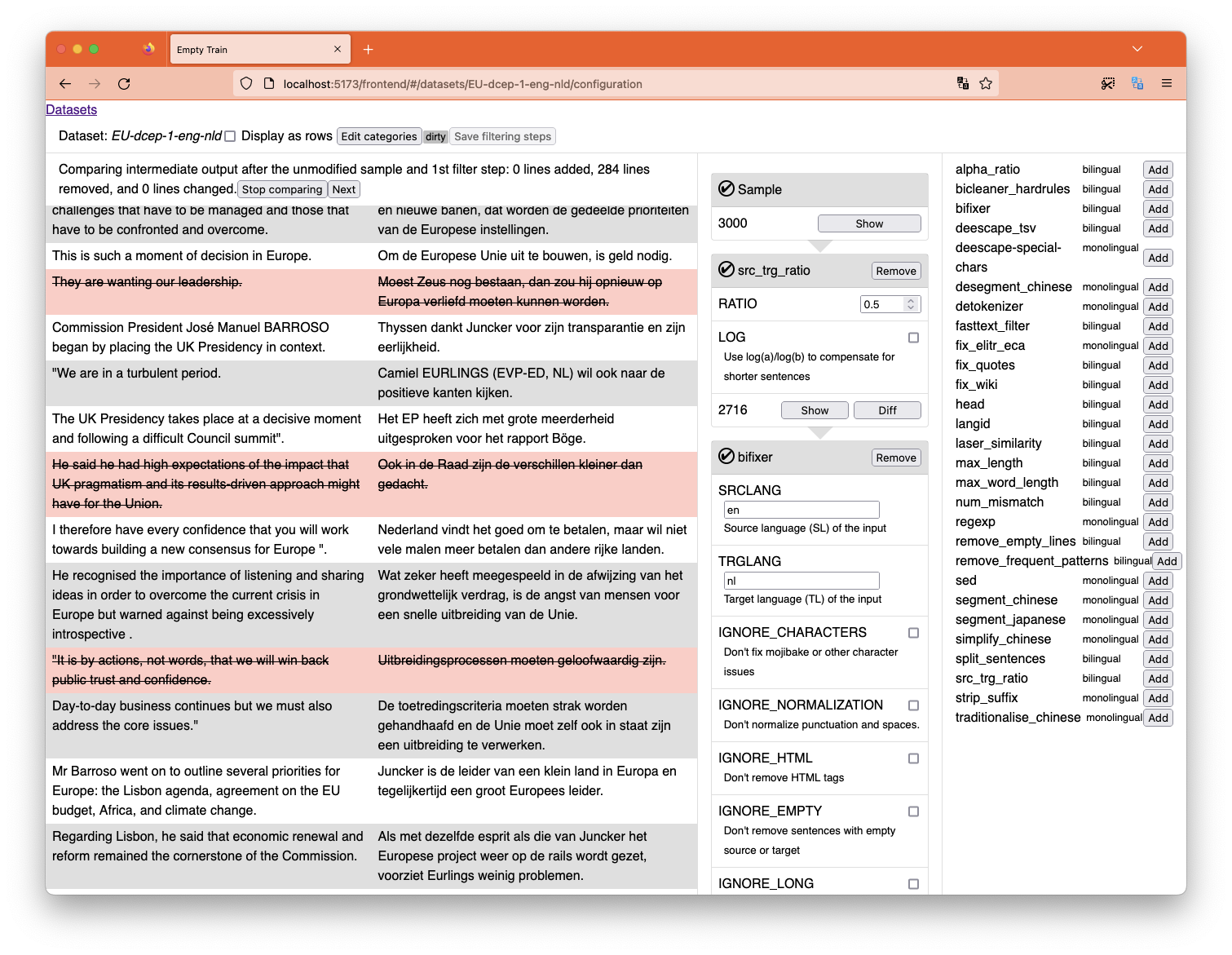

Compare the dataset at different stages of filtering to see the impact of each filter.

Using your own data

OpusCleaner scans for datasets and finds them automatically if they're in the right format. When you download OPUS data, it will get converted to this format, and there's nothing stopping you from adding your own in the same format.

By default, it scans for files matching data/train-parts/*.*.gz and derives which files make up a dataset from the filenames: name.en.gz and name.de.gz will form a dataset called name. The files use the standard Moses format: one sentence per line, with the Nth line of the first file corresponding to the Nth line of the second file.

When in doubt, just download one of the OPUS datasets through OpusCleaner, and replicate the format for your own dataset.
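As a rough illustration of the format described above, the following sketch writes a list of sentence pairs to two gzipped, line-aligned files. The function names, the dataset name mycorpus, and the en/de language codes are placeholders chosen for this example; they are not part of OpusCleaner.

```python
import gzip
from pathlib import Path


def write_dataset(directory, name, pairs, src_lang="en", trg_lang="de"):
    """Write sentence pairs as name.<src_lang>.gz and name.<trg_lang>.gz."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    src_path = directory / f"{name}.{src_lang}.gz"
    trg_path = directory / f"{name}.{trg_lang}.gz"
    with gzip.open(src_path, "wt", encoding="utf-8") as src, \
            gzip.open(trg_path, "wt", encoding="utf-8") as trg:
        for src_sentence, trg_sentence in pairs:
            # Collapse all whitespace (including newlines) so every sentence
            # occupies exactly one line and the two files stay aligned.
            src.write(" ".join(src_sentence.split()) + "\n")
            trg.write(" ".join(trg_sentence.split()) + "\n")
    return src_path, trg_path


def count_lines(path):
    """Count the lines in a gzipped text file."""
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        return sum(1 for _ in fh)


if __name__ == "__main__":
    pairs = [("Hello world", "Hallo Welt"), ("Good morning", "Guten Morgen")]
    src, trg = write_dataset("data/train-parts", "mycorpus", pairs)
    # Both files must have the same number of lines
    assert count_lines(src) == count_lines(trg)
```

Checking that both files have the same number of lines is a cheap way to catch alignment problems before loading the dataset in the interface.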

If you want to use another path, you can use the DATA_PATH environment variable to change it, e.g. run DATA_PATH="./my-datasets/*.*.gz" opuscleaner-server.

Paths

- data/train-parts is scanned for datasets. You can change this by setting the DATA_PATH environment variable; the default is data/train-parts/*.*.gz.

- filters should contain filter json files. You can change this by setting the FILTER_PATH environment variable; the default is <PYTHON_PACKAGE>/filters/*.json.
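To preview which datasets a given DATA_PATH pattern would pick up, the naming convention can be approximated in a few lines. This is a sketch of the convention as described in this README, not OpusCleaner's actual scanning code; find_datasets is a hypothetical helper.

```python
import os
from collections import defaultdict
from glob import glob
from pathlib import Path

# Default pattern as documented above
DEFAULT_DATA_PATH = "data/train-parts/*.*.gz"


def find_datasets(pattern=None):
    """Group matching files into {dataset name: {language: path}}."""
    if pattern is None:
        pattern = os.environ.get("DATA_PATH", DEFAULT_DATA_PATH)
    datasets = defaultdict(dict)
    for filename in sorted(glob(pattern)):
        base = Path(filename).name
        if not base.endswith(".gz"):
            continue
        # "name.en.gz" -> "name.en" -> dataset "name", language "en"
        stem = base[: -len(".gz")]
        name, _, lang = stem.rpartition(".")
        if name and lang:
            datasets[name][lang] = filename
    return dict(datasets)


if __name__ == "__main__":
    for name, files in find_datasets().items():
        print(name, sorted(files))
```

Splitting on the last dot before .gz means a dataset name may itself contain dots.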

Installation for development

For building from source (i.e. from git, not from a package downloaded from PyPI) you'll need to have node and npm installed.

python3 -m venv .env

bash --init-file .env/bin/activate

pip install -e .

Finally, you can run opuscleaner-server as normal. The --reload option will cause it to restart when any of the Python files change.

opuscleaner-server serve --reload

Then go to http://127.0.0.1:8000/ for the "interface" or http://127.0.0.1:8000/docs for the API.

Frontend development

If you're doing frontend development, try also running:

cd frontend

npm run dev

Then go to http://127.0.0.1:5173/ for the "interface".

This will put vite in hot-reloading mode for easier JavaScript development. All API requests will be proxied to the Python server running on port 8000, which is why you need to run both at the same time.

Filters

If you want to use LASER, you will also need to download its assets:

python -m laserembeddings download-models

Packaging

Run npm run build in the frontend/ directory first, and then run hatch build . in the project directory to build the wheel and source distribution.

To push a new release to PyPI from GitHub, tag a commit with a vX.Y.Z version number (including the v prefix). Then publish a release on GitHub. This should trigger a workflow that pushes an sdist and a wheel to PyPI.
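Before tagging, it can help to check that the tag matches the vX.Y.Z shape. The sketch below assumes that X, Y and Z are plain integers and that no suffix (such as a release candidate marker) is allowed; the README does not say whether the workflow accepts anything else, and parse_release_tag is a hypothetical helper.

```python
import re

# Assumption: exactly three dot-separated integers after a literal "v"
TAG_PATTERN = re.compile(r"v(\d+)\.(\d+)\.(\d+)")


def parse_release_tag(tag):
    """Return (major, minor, patch) for a tag like 'v0.7.1', or raise ValueError."""
    match = TAG_PATTERN.fullmatch(tag)
    if match is None:
        raise ValueError(f"not a vX.Y.Z release tag: {tag!r}")
    return tuple(int(part) for part in match.groups())


if __name__ == "__main__":
    print(parse_release_tag("v0.7.1"))
```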

Acknowledgements

This project has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement No 101070350 and from UK Research and Innovation (UKRI) under the UK government’s Horizon Europe funding guarantee [grant number 10052546].

