Multi-Modal Transformers library for Semantic Search and other Vision-Language tasks


UForm

Pocket-Sized Multi-Modal AI

For Semantic Search & Recommendation Systems

Welcome to UForm, a multi-modal AI library that's as versatile as it is efficient. Imagine encoding text, images, and soon, audio, video, and JSON documents into a shared Semantic Vector Space. With compact custom pre-trained transformer models, all of this can run anywhere—from your server farm down to your smartphone. Check them out on HuggingFace!

🌟 Key Features

⚡ Speed & Efficiency

- Tiny Embeddings: With just 256 dimensions, our embeddings are lean and fast to work with, making your search operations 1.5-3x quicker compared to other CLIP-like models with 512-1024 dimensions.

- Quantization Magic: Our models are trained to be quantization-aware, letting you downcast embeddings from f32 to i8 without losing much accuracy. Supported by USearch, this leads to a further 3x reduction in index size and up to a 5x higher performance, especially on IoT devices with low floating-point performance.
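To make the downcasting idea concrete, here is a minimal sketch in plain Python. It assumes unit-normalized embeddings and a simple symmetric scale of 127; the `quantize_i8` helper and the scaling scheme are illustrative assumptions, not the actual UForm or USearch implementation.

```python
import math
import random

def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def quantize_i8(vector, scale=127):
    """Map unit-normalized floats in [-1, 1] to integers in [-127, 127]."""
    return [max(-scale, min(scale, round(x * scale))) for x in vector]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

random.seed(0)
dims = 256  # embedding size quoted above
a = normalize([random.gauss(0, 1) for _ in range(dims)])
b = normalize([random.gauss(0, 1) for _ in range(dims)])

exact = cosine(a, b)                            # similarity on float values
approx = cosine(quantize_i8(a), quantize_i8(b))  # similarity on i8 values
error = abs(exact - approx)

# Raw storage per vector: 4 bytes per f32 value versus 1 byte per i8 value.
bytes_f32 = dims * 4
bytes_i8 = dims * 1
```

In this toy setup the raw vector payload shrinks from 1024 to 256 bytes while the cosine similarity barely moves. The index-level saving quoted above is smaller, presumably because an index stores more than the raw vectors.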

🌍 Global Reach

- Balanced Training: Our models are cosmopolitan, trained on a uniquely balanced diet of English and other languages. This gives us an edge in languages often overlooked by other models, from Hebrew and Armenian to Hindi and Arabic.

🎛 Versatility

- Mid-Fusion: Our models use mid-fusion to align multiple transformer towers, enabling database-like operations on multi-modal data.

- Bi-Modal Features: Thanks to mid-fusion, our models can produce combined vision & language features, perfect for recommendation systems.

- Cheap Inference: Our models have under 1 Billion parameters, meaning substantially higher throughput and lower inference costs than even tiny models, like the famous distilbert.

- Hardware Friendly: Whether it's CoreML, ONNX, or specialized AI hardware like Graphcore IPUs, we've got you covered.

🎓 Architectural Improvements

Inspired by the ALBEF paper by Salesforce, we've pushed the boundaries of pre-training objectives to squeeze more language-vision understanding into smaller models. Some UForm models were trained on just 4 million samples across 10 consumer-grade GPUs, a 100x reduction in both dataset size and compute budget compared to OpenAI's CLIP. While they may not be suited for zero-shot classification tasks, they are your go-to choice for processing large image datasets or even petabytes of video frame-by-frame.

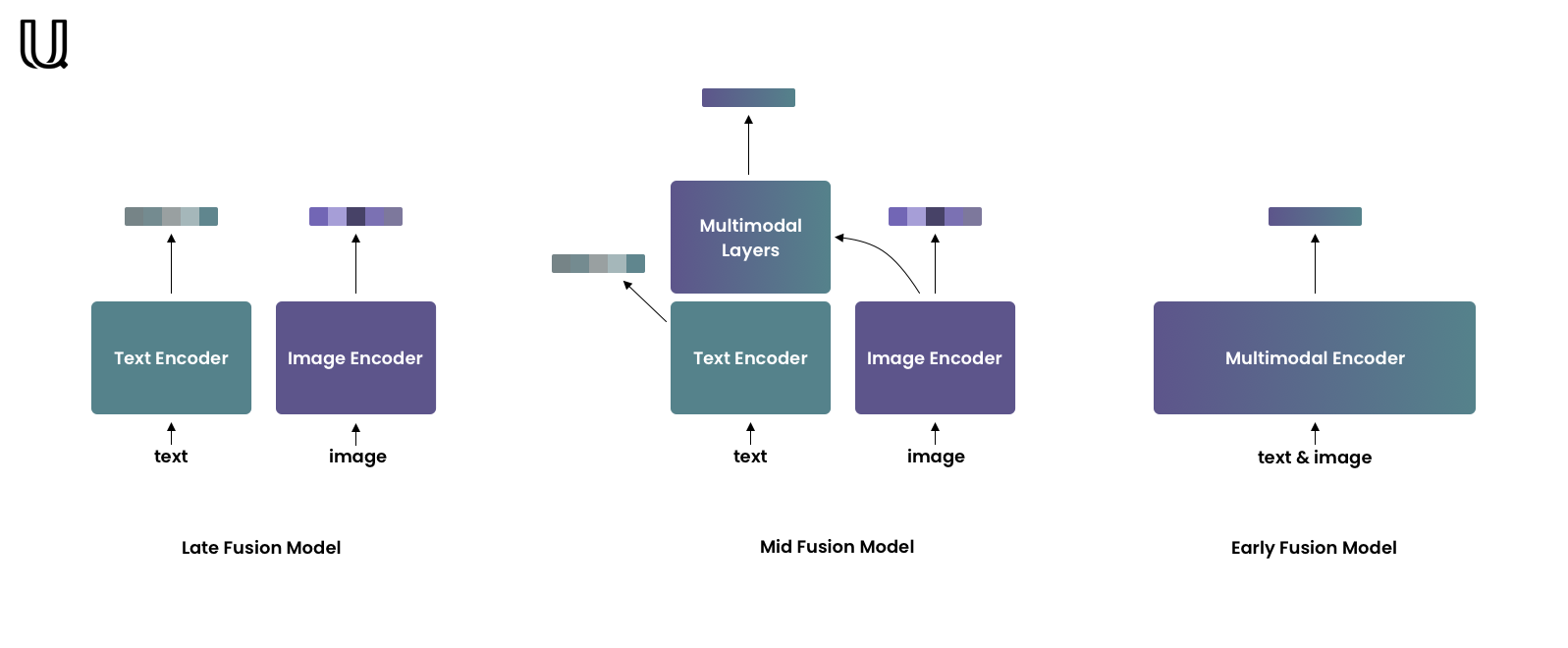

Mid-Fusion

- Late-Fusion Models: Great for capturing the big picture but might miss the details. Ideal for large-scale retrieval. OpenAI CLIP is one of those.

- Early-Fusion Models: These are detail-oriented models that capture fine-grained features. They're usually employed for re-ranking smaller retrieval results.

- Mid-Fusion Models: The balanced diet of models. They offer an unimodal and a multimodal part, capturing both the forest and the trees. The multimodal part enhances the unimodal features with a cross-attention mechanism.

Broad Training Objectives

We adopt the following training objectives, in line with methodologies presented in the ALBEF and ViCHA papers:

- Image-Text Matching (ITM): Uses a loss function to gauge how well the image complements the text.

- Masked Language Modeling (MLM): Stacked on the multimodal encoder to improve language understanding.

- Hierarchical Image-Text Contrastive (H-ITC): Compares representations across layers for better alignment.

- Masked Image Modeling (SSL): Applied to the image encoder to enhance visual data interpretation.
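As a rough illustration of the contrastive part of these objectives, the following sketch computes a symmetric image-text contrastive (InfoNCE-style) loss over a small batch in plain Python. It is a simplified, single-layer version written for clarity; the function names and the temperature value are assumptions, and it is not UForm's training code.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cross_entropy(logits, target_index):
    """Negative log-softmax of the target entry, computed stably."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum - logits[target_index]

def contrastive_loss(image_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss; image i is assumed to match text i."""
    n = len(image_embeddings)
    # Scaled similarity of every image against every text in the batch.
    sims = [[dot(img, txt) / temperature for txt in text_embeddings]
            for img in image_embeddings]
    image_to_text = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Toy unit-length embeddings: aligned pairs should give a lower loss.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]

loss_aligned = contrastive_loss(images, aligned_texts)
loss_swapped = contrastive_loss(images, swapped_texts)
```

The loss is close to zero when each image is most similar to its own caption and grows when the pairs are mismatched, which is the signal that pulls matching image and text embeddings together.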

🛠 Installation

Install UForm via pip:

pip install uform

Note: For versions below 0.3.0, dependencies include transformers and timm. Newer versions only require PyTorch and utility libraries. For optimal performance, use PyTorch v2.0.0 or above.

🚀 Quick Start

Loading a Model

import uform

model = uform.get_model('unum-cloud/uform-vl-english') # Just English

model = uform.get_model('unum-cloud/uform-vl-multilingual-v2') # 21 Languages

The multi-lingual model is much heavier because its vocabulary is 10x larger. So, if you only expect English data, use the English model for efficiency.

You can also load your own mid-fusion model. Just upload it to HuggingFace and pass the model name to get_model.

Encoding Data

from PIL import Image

text = 'a small red panda in a zoo'

image = Image.open('red_panda.jpg')

image_data = model.preprocess_image(image)

text_data = model.preprocess_text(text)

image_embedding = model.encode_image(image_data)

text_embedding = model.encode_text(text_data)

joint_embedding = model.encode_multimodal(image=image_data, text=text_data)

Retrieving Features

image_features, image_embedding = model.encode_image(image_data, return_features=True)

text_features, text_embedding = model.encode_text(text_data, return_features=True)

These features can later be used to produce joint multimodal encodings faster, as the first layers of the transformer can be skipped. This can be useful for re-ranking search results and for recommendation systems.

joint_embedding = model.encode_multimodal(

image_features=image_features,

text_features=text_features,

attention_mask=text_data['attention_mask']

)

Graphcore IPUs

To run on Graphcore IPUs, you must set up PopTorch first. Follow the user guide on their website. Once complete, our example would need a couple of adjustments to best leverage the Graphcore platform's available data and model-parallelism.

import poptorch

from PIL import Image

from uform import get_model_ipu

options = poptorch.Options()

options.replicationFactor(1)

options.deviceIterations(4)

model = get_model_ipu('unum-cloud/uform-vl-english').parallelize()

model = poptorch.inferenceModel(model, options=options)

text = 'a small red panda in a zoo'

image = Image.open('red_panda.jpg')

image_data = model.preprocess_image(image)

text_data = model.preprocess_text(text)

image_data = image_data.repeat(4, 1, 1, 1)

text_data = {k: v.repeat(4, 1) for k,v in text_data.items()}

image_features, text_features = model(image_data, text_data)

Cloud API

You can also use our larger, faster, better proprietary models deployed in optimized cloud environments. For that, please choose the cloud of your liking, search the marketplace for "Unum UForm", and reinstall UForm with optional dependencies:

$ pip install uform[remote]

model = uform.get_client('0.0.0.0:7000')

The only thing that changes after that is calling get_client with the IP address of your instance instead of using get_model for local usage.

Please, join our Discord for early access!

📊 Models

Architecture

| Model | Language Tower | Image Tower | Multimodal Part | Languages | URL |

|---|---|---|---|---|---|

| unum-cloud/uform-vl-english | BERT, 2 layers | ViT-B/16 | 2 layers | 1 | weights.pt |

| unum-cloud/uform-vl-multilingual | BERT, 8 layers | ViT-B/16 | 4 layers | 12 | weights.pt |

| unum-cloud/uform-vl-multilingual-v2 | BERT, 8 layers | ViT-B/16 | 4 layers | 21 | weights.pt |

The multilingual models were trained on a language-balanced dataset. The missing captions were augmented with NLLB, effectively distilling multi-lingual capabilities from a large NMT model into our tiny multi-modal encoder.

Accuracy

Evaluating the unum-cloud/uform-vl-multilingual-v2 model, one can expect the following metrics for text-to-image search, compared against the xlm-roberta-base-ViT-B-32 OpenCLIP model.

The @ 1, @ 5, and @ 10 columns show the quality of the top-1, top-5, and top-10 search results, measured against a human-annotated dataset.

Higher is better.

| Language | OpenCLIP @ 1 | UForm @ 1 | OpenCLIP @ 5 | UForm @ 5 | OpenCLIP @ 10 | UForm @ 10 | Speakers |

|---|---|---|---|---|---|---|---|

| Arabic 🇸🇦 | 22.7 | 31.7 | 44.9 | 57.8 | 55.8 | 69.2 | 274 M |

| Armenian 🇦🇲 | 5.6 | 22.0 | 14.3 | 44.7 | 20.2 | 56.0 | 4 M |

| Chinese 🇨🇳 | 27.3 | 32.2 | 51.3 | 59.0 | 62.1 | 70.5 | 1'118 M |

| English 🇺🇸 | 37.8 | 37.7 | 63.5 | 65.0 | 73.5 | 75.9 | 1'452 M |

| French 🇫🇷 | 31.3 | 35.4 | 56.5 | 62.6 | 67.4 | 73.3 | 274 M |

| German 🇩🇪 | 31.7 | 35.1 | 56.9 | 62.2 | 67.4 | 73.3 | 134 M |

| Hebrew 🇮🇱 | 23.7 | 26.7 | 46.3 | 51.8 | 57.0 | 63.5 | 9 M |

| Hindi 🇮🇳 | 20.7 | 31.3 | 42.5 | 57.9 | 53.7 | 69.6 | 602 M |

| Indonesian 🇮🇩 | 26.9 | 30.7 | 51.4 | 57.0 | 62.7 | 68.6 | 199 M |

| Italian 🇮🇹 | 31.3 | 34.9 | 56.7 | 62.1 | 67.1 | 73.1 | 67 M |

| Japanese 🇯🇵 | 27.4 | 32.6 | 51.5 | 59.2 | 62.6 | 70.6 | 125 M |

| Korean 🇰🇷 | 24.4 | 31.5 | 48.1 | 57.8 | 59.2 | 69.2 | 81 M |

| Persian 🇮🇷 | 24.0 | 28.8 | 47.0 | 54.6 | 57.8 | 66.2 | 77 M |

| Polish 🇵🇱 | 29.2 | 33.6 | 53.9 | 60.1 | 64.7 | 71.3 | 41 M |

| Portuguese 🇵🇹 | 31.6 | 32.7 | 57.1 | 59.6 | 67.9 | 71.0 | 257 M |

| Russian 🇷🇺 | 29.9 | 33.9 | 54.8 | 60.9 | 65.8 | 72.0 | 258 M |

| Spanish 🇪🇸 | 32.6 | 35.6 | 58.0 | 62.8 | 68.8 | 73.7 | 548 M |

| Thai 🇹🇭 | 21.5 | 28.7 | 43.0 | 54.6 | 53.7 | 66.0 | 61 M |

| Turkish 🇹🇷 | 25.5 | 33.0 | 49.1 | 59.6 | 60.3 | 70.8 | 88 M |

| Ukrainian 🇺🇦 | 26.0 | 30.6 | 49.9 | 56.7 | 60.9 | 68.1 | 41 M |

| Vietnamese 🇻🇳 | 25.4 | 28.3 | 49.2 | 53.9 | 60.3 | 65.5 | 85 M |

| Mean | 26.5±6.4 | 31.8±3.5 | 49.8±9.8 | 58.1±4.5 | 60.4±10.6 | 69.4±4.3 | - |

| Google Translate | 27.4±6.3 | 31.5±3.5 | 51.1±9.5 | 57.8±4.4 | 61.7±10.3 | 69.1±4.3 | - |

| Microsoft Translator | 27.2±6.4 | 31.4±3.6 | 50.8±9.8 | 57.7±4.7 | 61.4±10.6 | 68.9±4.6 | - |

| Meta NLLB | 24.9±6.7 | 32.4±3.5 | 47.5±10.3 | 58.9±4.5 | 58.2±11.2 | 70.2±4.3 | - |

Lacking a broad enough evaluation dataset, we translated the COCO Karpathy test split with multiple public and proprietary translation services, averaging the scores across all sets, and breaking them down by translation service in the bottom rows of the table. Check out the unum-cloud/coco-sm repository for details.
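The @ K columns can be read as recall-style metrics: the share of text queries whose correct image appears among the top K results. A minimal sketch of that computation follows, assuming one ground-truth image per query. The `recall_at_k` helper and the toy data are hypothetical and do not reproduce the evaluation script from the repository.

```python
def recall_at_k(ranked_results, ground_truth, k):
    """Percentage of queries whose correct item is within the top-k results."""
    hits = sum(
        1 for ranking, target in zip(ranked_results, ground_truth)
        if target in ranking[:k]
    )
    return 100.0 * hits / len(ground_truth)

# Each inner list holds image ids sorted from most to least similar
# to one text query.
ranked_results = [
    ["img_3", "img_1", "img_7"],
    ["img_2", "img_5", "img_9"],
    ["img_8", "img_4", "img_6"],
    ["img_0", "img_9", "img_1"],
]
# The correct image id for each of the four queries.
ground_truth = ["img_3", "img_5", "img_6", "img_2"]

recall_1 = recall_at_k(ranked_results, ground_truth, k=1)  # 25.0
recall_3 = recall_at_k(ranked_results, ground_truth, k=3)  # 75.0
```

Only the first query finds its image at rank 1, while three of the four find it within the top 3, which is why the score can only stay equal or rise as K grows.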

Speed

On an RTX 3090, the following text-encoding performance is expected from uform.

| Model | Multi-lingual | Model Size | Speed | Speedup |

|---|---|---|---|---|

| bert-base-uncased | No | 109'482'240 | 1'612 seqs/s | |

| distilbert-base-uncased | No | 66'362'880 | 3'174 seqs/s | x 1.96 |

| sentence-transformers/all-MiniLM-L12-v2 | Yes | 33'360'000 | 3'604 seqs/s | x 2.24 |

| sentence-transformers/all-MiniLM-L6-v2 | No | 22'713'216 | 6'107 seqs/s | x 3.79 |

| unum-cloud/uform-vl-multilingual-v2 | Yes | 120'090'242 | 6'809 seqs/s | x 4.22 |
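Throughput figures like these are typically obtained by timing repeated encoding calls. Below is a generic sketch of such a measurement. The `measure_throughput` helper and the stand-in `fake_encode` function are hypothetical, and the benchmark behind the table may have been set up differently (batch size, warm-up, sequence length).

```python
import time

def measure_throughput(encode, batch, repeats=20, warmup=3):
    """Return sequences per second for `encode` called on `batch`."""
    for _ in range(warmup):  # warm-up calls are excluded from timing
        encode(batch)
    start = time.perf_counter()
    for _ in range(repeats):
        encode(batch)
    elapsed = time.perf_counter() - start
    return repeats * len(batch) / elapsed

def fake_encode(batch):
    """Stand-in for a real text encoder; it only does trivial work."""
    return [len(text) for text in batch]

batch = ["a small red panda in a zoo"] * 64
seqs_per_second = measure_throughput(fake_encode, batch)
```

To time a real model, pass a function that preprocesses and encodes the batch in place of `fake_encode`. On a GPU, the device should also be synchronized before each clock reading, since kernels run asynchronously.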

🧰 Additional Tooling

There are two options to calculate semantic compatibility between an image and a text: Cosine Similarity and Matching Score.

Cosine Similarity

import torch.nn.functional as F

similarity = F.cosine_similarity(image_embedding, text_embedding)

The similarity will belong to the [-1, 1] range, 1 meaning the absolute match.

Pros:

- Computationally cheap.

- Only unimodal embeddings are required. Unimodal encoding is faster than joint encoding.

- Suitable for retrieval in large collections.

Cons:

- Takes into account only coarse-grained features.

Matching Score

Unlike cosine similarity, the matching score cannot be computed from unimodal embeddings alone.

It requires the joint embedding, and the resulting score will belong to the [0, 1] range, 1 meaning the absolute match.

score = model.get_matching_scores(joint_embedding)

Pros:

- Joint embedding captures fine-grained features.

- Suitable for re-ranking - sorting retrieval results.

Cons:

- Resource-intensive.

- Not suitable for retrieval in large collections.
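The two measures are complementary, so a common pattern is to retrieve candidates by cosine similarity and then re-rank only the short list with the matching score. The sketch below illustrates that flow in plain Python. The `search_then_rerank` helper and the `matching_score` callback are hypothetical stand-ins; in practice the callback would wrap the joint encoding and get_matching_scores calls shown above.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search_then_rerank(query, collection, matching_score, shortlist=3):
    """Stage 1: cheap cosine retrieval. Stage 2: costly re-ranking."""
    by_cosine = sorted(
        collection,
        key=lambda key: cosine(query, collection[key]),
        reverse=True,
    )
    candidates = by_cosine[:shortlist]  # only these reach the joint model
    return sorted(candidates, key=matching_score, reverse=True)

# Toy 2-D image embeddings keyed by image id.
collection = {
    "panda": [0.9, 0.1],
    "fox": [0.8, 0.3],
    "car": [-0.7, 0.6],
    "tree": [0.1, 0.9],
}
query = [1.0, 0.2]  # toy text embedding

# Made-up fine-grained scores that a joint model might return.
fine_scores = {"panda": 0.95, "fox": 0.40, "tree": 0.55, "car": 0.05}
results = search_then_rerank(query, collection, fine_scores.get, shortlist=3)
```

The expensive scorer runs on three items instead of the whole collection, and it is free to reorder them: here "tree" overtakes "fox" after re-ranking even though its cosine similarity was lower.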
